Folding @ Quarantined Home

Recently, Bridge Fusion Systems acquired two modestly equipped server machines to beef up the local network infrastructure at the office. They are in the process of being configured for this purpose, but in the meantime we’ve found an interesting use for them, in response to the current global pandemic due to COVID-19. You don’t have to have a server machine to participate in this effort. Some of Bridge Fusion Systems’ employees have also joined the effort with their personal computing hardware. The PC or Mac that you have sitting around most of the time can participate too.

I first heard about distributed computing probably in college, now some two-digit years ago. Normally, a computer executes programs locally for its own user(s). With distributed computing projects, donors volunteer computing time from personal computers to a specific cause. SETI@Home, launched in May 1999, was the first project of this kind to really popularize the genre. It provided a mechanism for distributed computers to collaboratively search radio signals from space for signs of alien intelligence. I think I fired up a SETI@Home client for a few weeks before losing interest.

In a more recent incarnation of distributed computing (by about 1.5 years), Folding@Home has risen in popularity due to its contributions to efforts in modeling the COVID-19 virus and its protein interactions. When the project maintainers announced this effort to combat the coronavirus, the public quickly mobilized behind the project. We at Bridge Fusion heard this call, and the following is the documentation of our efforts to put our servers to use in this way.

Folding@Home Headless Installation

Setting up Folding@Home on a computer with a Graphical User Interface (GUI) is usually rather straightforward. Download the program, run it, complete the installation wizard, and you're good to go. However, the server machines are running a "headless" operating system, which means they have no GUI. That said, the Folding@Home documents made this pretty easy too. I created a new container on one of the servers, and ran the following commands:

wget https://download.foldingathome.org/releases/public/release/fahclient/debian-stable-64bit/v7.5/fahclient_7.5.1_amd64.deb

dpkg -i --force-depends fahclient_7.5.1_amd64.deb

At this point, the Folding@Home (F@H) client was running, waiting to receive a Work Unit (WU) from one of the central coordinator servers. The F@H client provides an interface to its controls and status via a web service, hosted by the client itself. However, the default behavior of the client is to only allow access to its control page from the local machine. This presents a difficulty for a machine without a GUI! To allow access to the control page from another computer, the F@H client's config.xml file had to be changed.

First, stop the F@H Client:

systemctl stop FAHClient

Then, edit the config file:

vi /etc/fahclient/config.xml

I changed the 'allow' and 'web-allow' tags to allow access from any IP address (any other computer on the same network):

<config>

<!-- Folding Slot Configuration -->

<gpu v='false'/>

<!-- HTTP Server -->

<allow v='0/0'/>

<!-- Slot Control -->

<power v='full'/>

<!-- User Information -->

<team v='243174'/>

<user v='BFS_fah1'/>

<!-- Web Server -->

<web-allow v='0/0'/>

<!-- Folding Slots -->

<slot id='0' type='CPU'/>

</config>

Then, restart the client:

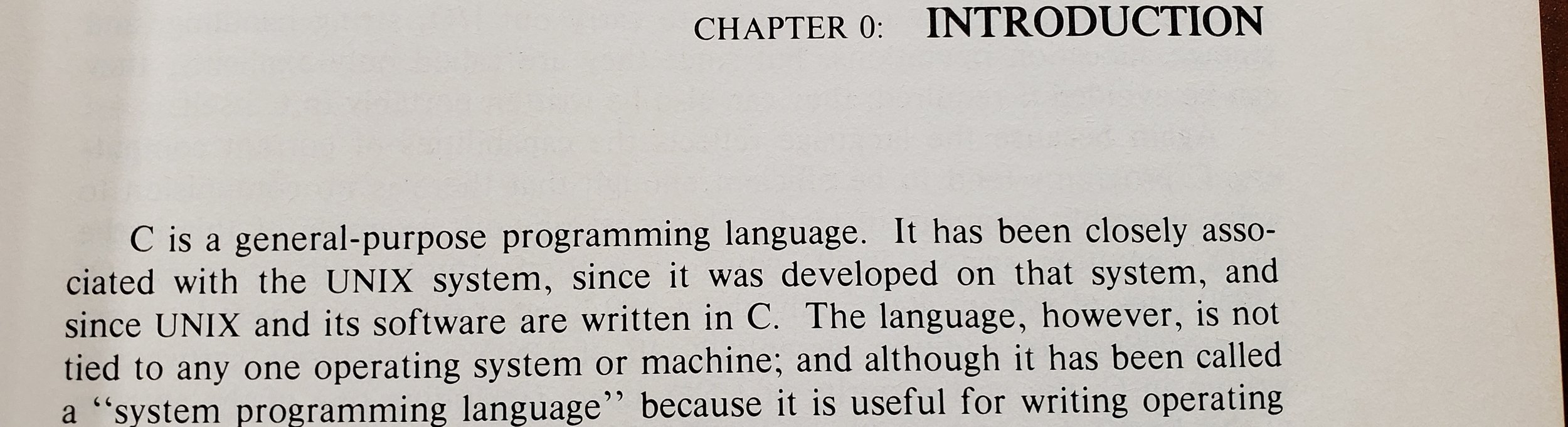

systemctl start FAHClient

And now, we have control! The client's control page is accessible from a URL constructed using either the machine's hostname or IP address. With a machine name of "folding1", we can access it here:

http://folding1.bfs.lan:7396

And a pretty picture to prove it:

If you want to join Bridge Fusion Systems’ team, use team number 243174. Happy folding!
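For anyone who would rather script the stop/edit/start sequence than edit config.xml by hand, here is a minimal sketch. The function names `set_fah_access` and `check_fah_web` are hypothetical helpers written for this post, not part of Folding@Home. The sketch assumes GNU sed, `curl` on the checking machine, and a config file that writes its values with single quotes (`v='...'`), as in the listing above.

```shell
#!/bin/sh
# Hypothetical helpers; the names are illustrative and not part of Folding@Home.

# Add or update <allow> and <web-allow> in a FAHClient config.xml.
# $1 = path to config.xml, $2 = address pattern (0/0 is the value used in this post)
# Assumes GNU sed and single-quoted values (v='...').
set_fah_access() {
    cfg="$1"
    pattern="$2"
    cp "$cfg" "$cfg.bak"    # keep a backup of the original file
    for tag in allow web-allow; do
        if grep -q "<$tag " "$cfg"; then
            # Tag already present: replace its value in place.
            sed -i "s|<$tag v='[^']*'/>|<$tag v='$pattern'/>|" "$cfg"
        else
            # Tag missing: insert it just before the closing </config>.
            sed -i "s|</config>|<$tag v='$pattern'/>\n</config>|" "$cfg"
        fi
    done
}

# Report whether anything answers over HTTP on the web control port.
# $1 = hostname or IP address, $2 = port (defaults to 7396)
check_fah_web() {
    url="http://$1:${2:-7396}/"
    # curl prints 000 as the status code when no HTTP response was received.
    code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$url")
    if [ -n "$code" ] && [ "$code" != "000" ]; then
        echo "reachable: $url (HTTP $code)"
    else
        echo "unreachable: $url"
        return 1
    fi
}
```

On the server, this would be used as `systemctl stop FAHClient`, then `set_fah_access /etc/fahclient/config.xml 0/0`, then `systemctl start FAHClient`. From another machine on the network, `check_fah_web folding1.bfs.lan` should then report the page as reachable.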

Some advanced setup

Well, we do have two servers, so the next step I took was to clone the container holding the F@H Client and migrate it to the other server. With Proxmox, this is very easy:

Access to the web interface still seemed a little clunky to me (who wants to remember port 7396?). So, I went the extra mile and created a reverse HTTP proxy to provide a nice, user-friendly URL to access the page from. I fired up another container in Proxmox to provide a reverse proxy service to forward connections as follows:

http://fah1.bfs.lan => http://folding1.bfs.lan:7396

I installed nginx in the container:

apt install nginx

Then I got the reverse proxy up and running using the following configuration file:

vi /etc/nginx/sites-available/fah1.bfs.lan

Contents:

server {

listen 80;

listen [::]:80;

server_name fah1.bfs.lan;

location / {

proxy_pass http://folding1.bfs.lan:7396;

proxy_set_header Host $host;

}

}

I also copied this file to provide proxy service for the second Folding@Home instance on the other server (fah2.bfs.lan).
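Rather than copying and hand-editing the file, the second site can be generated from the same template. This is only a sketch: `write_fah_site` is a hypothetical helper, and `folding2.bfs.lan` is an assumed hostname for the cloned container, which this post does not name.

```shell
#!/bin/sh
# Hypothetical helper: write an nginx reverse-proxy site file like the one above.
# $1 = public name (e.g. fah1.bfs.lan)
# $2 = backend host:port (e.g. folding1.bfs.lan:7396)
# $3 = output directory (e.g. /etc/nginx/sites-available)
write_fah_site() {
    name="$1"
    backend="$2"
    dir="$3"
    # \$host is escaped so that nginx, not the shell, expands it.
    cat > "$dir/$name" <<EOF
server {
    listen 80;
    listen [::]:80;
    server_name $name;

    location / {
        proxy_pass http://$backend;
        proxy_set_header Host \$host;
    }
}
EOF
}
```

A call such as `write_fah_site fah2.bfs.lan folding2.bfs.lan:7396 /etc/nginx/sites-available` would produce the second file. On a Debian-packaged nginx, site files are normally loaded from /etc/nginx/sites-enabled, so each file would likely also need a symlink there, for example `ln -s /etc/nginx/sites-available/fah2.bfs.lan /etc/nginx/sites-enabled/`.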

Test and reload the nginx configuration:

nginx -t

nginx -s reload

One final step: Create an alias for "fah1" and "fah2" in our DNS Resolver to point to the reverse proxy IP address. Here at BFS, we use pfSense for this task:

Now, we can access the control/status pages via:

http://fah1.bfs.lan

http://fah2.bfs.lan

Final Notes

● CAUTION: The configuration above will allow any computer on your network to change settings for your client. Our folding client is on a local network, and we trust everyone here to not mess with it.

● I think there are configuration settings for requiring a password to change client settings, but they may also restrict viewing the client status.

● Normally, you’d want a reverse proxy to provide SSL service, and even redirect HTTP requests to HTTPS, but for this example it would require more effort than we wanted to put into it (Let’s Encrypt certificates, more complex proxy configuration, etc.).
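On a less trusted network, one way to soften the caution in the first note might be to narrow the 0/0 pattern to a single subnet. The sketch below rests on two assumptions: that the client accepts a CIDR-style subnet in the same place it accepts 0/0 (worth confirming against the client documentation), and that 192.168.1.0/24 stands in for your own LAN range. `narrow_fah_access` is a hypothetical helper. It prints the modified config to standard output and leaves the file untouched, so the result can be reviewed first.

```shell
#!/bin/sh
# Hypothetical helper: print a FAHClient config with every 0/0 access pattern
# replaced by a narrower one. The file itself is not modified.
# $1 = path to config.xml, $2 = replacement pattern (e.g. a LAN subnet)
# Assumes single-quoted values (v='...'), as in the listing in this post.
narrow_fah_access() {
    # Matches both <allow v='0/0'/> and <web-allow v='0/0'/>.
    sed "s|\(allow v='\)0/0'|\1$2'|" "$1"
}
```

For example, `narrow_fah_access /etc/fahclient/config.xml 192.168.1.0/24 > /tmp/config.xml.new` writes a candidate file that can be compared against the original before the client is stopped and the config replaced.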